Welcome to the new blog series on time series analysis. In the previous blog, we saw how to build a logistic regression model using Python.

Time series analysis is a predictive modeling technique. It is used in various business applications to forecast future quantity and demand. It also helps in understanding the historical patterns of a business.

Here are a few use cases for time series analysis:

- Forecasting sales

- Inventory planning

- Stock market analysis

- Understanding a sudden surge in the demand or price of a particular item during the year

Now let’s see the definition of a time series: “A time series is an ordered sequence of data points at equally spaced time intervals.”

The definition says an ordered sequence of data, which means that if the order of the data changes, the forecast changes too. This is not the case in most other kinds of data analysis. In a time series, the model predicts based not only on the values but also on the order in which they occur. That’s why data whose sequence has been altered shouldn’t be used for time series analysis.
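To make the point about ordering concrete, here is a minimal sketch in Python. The `sales` values and the `drift_forecast` helper are hypothetical and made up for illustration; the helper is a deliberately naive forecast, not a method used later in this series.

```python
from statistics import mean

def drift_forecast(values):
    """Naive forecast: last value plus the average step between observations."""
    avg_step = (values[-1] - values[0]) / (len(values) - 1)
    return values[-1] + avg_step

# Hypothetical monthly sales, in time order.
sales = [100, 110, 120, 130, 140, 150]
# The same values in reversed order.
reordered = sales[::-1]

# An order-insensitive statistic does not notice the change.
print(mean(sales), mean(reordered))

# A forecast that depends on the sequence does.
print(drift_forecast(sales), drift_forecast(reordered))  # 160.0 90.0
```

The mean is identical for both lists, while the forecast flips from growth to decline once the order is changed.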

Characteristics of Time Series

These are a few characteristics exhibited by time series:

Trends

Trends can be observed by looking at a line graph, usually over a long stretch of time. A trend can be increasing or decreasing, and in a few cases the series stays roughly constant. For example, if stock exchange data for the last few years shows prices rising over time, we say there is an increasing trend, and vice versa.
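One simple way to expose a trend is to smooth out the short-term wiggles. The sketch below uses a trailing moving average; the `prices` data and the window size of 4 are assumptions chosen for illustration.

```python
def moving_average(values, window):
    """Trailing moving average; returns one value per full window."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]

# Hypothetical data: an upward drift with a small alternating wiggle on top.
prices = [10 + 0.5 * t + (1 if t % 2 == 0 else -1) for t in range(20)]

trend = moving_average(prices, window=4)

print(prices[:4])  # raw values move up and down
print(trend[:4])   # smoothed values rise steadily
```

The raw values zig-zag, but the smoothed series only goes up, which is the increasing trend.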

Seasonality

Seasonality is a regular, repeating pattern tied to the calendar cycle. For example, sales volume increases in festive seasons and remains normal otherwise. Another example is rain in the monsoon season. Seasonal patterns are usually periodic and predictable.
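A quick way to look for seasonality is to average all observations that fall at the same position in the cycle. This is a minimal sketch; the quarterly figures and the `seasonal_profile` helper are hypothetical.

```python
from statistics import mean

def seasonal_profile(values, period):
    """Average of all observations that share the same position in the cycle."""
    return [mean(values[pos::period]) for pos in range(period)]

# Hypothetical quarterly sales for three years; the fourth quarter is the festive peak.
quarterly_sales = [100, 105, 98, 160,
                   102, 107, 101, 165,
                   99, 104, 100, 170]

profile = seasonal_profile(quarterly_sales, period=4)
peak_quarter = profile.index(max(profile)) + 1

print(profile)
print(peak_quarter)  # 4
```

If the same position stands out year after year, as the fourth quarter does here, the series has a seasonal pattern.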

Autocorrelation

Just as correlation measures the extent of a linear relationship between two variables, autocorrelation measures the linear relationship between lagged values of a time series. AR (autoregressive) and MA (moving average) models are built on this relationship.
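The sketch below computes autocorrelation at a given lag directly from its definition. The sine-wave `series` with a 12-step cycle is an assumption made for illustration, and `autocorrelation` is a hand-written helper, not a library function.

```python
import math

def autocorrelation(values, lag):
    """Sample autocorrelation between the series and itself shifted by `lag` steps."""
    n = len(values)
    m = sum(values) / n
    num = sum((values[t] - m) * (values[t - lag] - m) for t in range(lag, n))
    den = sum((v - m) ** 2 for v in values)
    return num / den

# Hypothetical series that repeats every 12 steps.
series = [math.sin(2 * math.pi * t / 12) for t in range(120)]

for lag in (1, 6, 12):
    print(lag, round(autocorrelation(series, lag), 2))
```

Values one full cycle apart (lag 12) are strongly positively related, while values half a cycle apart (lag 6) are strongly negatively related.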

Long Term Cyclicity

Cyclicity occurs when the data exhibit rises and falls that are not of a fixed frequency. Economic recession, depression, and recovery are typical examples of cyclicity. The cyclic variation may be regular, but it need not be periodic.

Stationarity

A stationary time series is one whose statistical properties remain the same over time. In other words, its mean, variance, and autocovariance are time-invariant (almost constant over time). A stationary series is easier to forecast because we can assume that the statistical properties observed in past data will continue to hold in the future. Pure noise is stationary in nature.
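An informal way to get a feel for stationarity is to compare the mean and variance of the first and second halves of a series. This is only a rough sketch on simulated data, not a formal test (formal checks such as the augmented Dickey-Fuller test exist for that); `half_stats` and both series are assumptions made for illustration.

```python
import random
from statistics import mean, pvariance

def half_stats(values):
    """(mean, variance) of the first half and of the second half of a series."""
    mid = len(values) // 2
    first, second = values[:mid], values[mid:]
    return (mean(first), pvariance(first)), (mean(second), pvariance(second))

rng = random.Random(42)
noise = [rng.gauss(0, 1) for _ in range(1000)]          # pure noise
trending = [0.01 * t + e for t, e in enumerate(noise)]  # the same noise plus an upward trend

print("noise:   ", half_stats(noise))
print("trending:", half_stats(trending))
```

For the pure noise, both halves have roughly the same mean and variance. For the trending series, the mean of the second half is clearly higher, so the series is not stationary.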

Mathematical representation of Time Series

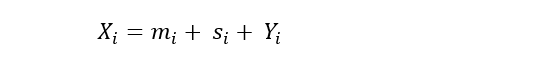

A simple mathematical equation for a time series could take one of the following forms:

Additive model: The additive model assumes that all three components of the time series (trend, seasonality, and noise) are independent of each other.

Multiplicative model: In the multiplicative model, all components behave in proportion to each other.

The mixed model is a combination of additive and multiplicative models.
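The equations themselves are not written out in the text above, so the block below gives the forms these models usually take in textbooks. The symbols are assumptions chosen for this sketch: $Y_t$ is the observed value at time $t$, $T_t$ the trend, $S_t$ the seasonal component, and $N_t$ the noise. The mixed form shown is only one of several possible variants.

```latex
% Additive model
Y_t = T_t + S_t + N_t

% Multiplicative model
Y_t = T_t \times S_t \times N_t

% Mixed model (one possible form)
Y_t = T_t \times S_t + N_t
```

When all components are positive, taking logarithms turns the multiplicative model into an additive one: $\log Y_t = \log T_t + \log S_t + \log N_t$.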

Approach for time series modeling

The first step in any modeling technique is to understand the data by visualizing it. In the case of time series, we usually visualize it with a line graph or scatter plot. The visualization gives a clear understanding of trends, seasonality, outliers, etc.

We also learn whether the data are continuous in nature. If there is any discontinuity, it is advisable to break the series into homogeneous segments.
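As one possible reading of "discontinuity", the sketch below treats it as missing observations and splits the series wherever the spacing between timestamps is not the expected step. The `split_on_gaps` helper and the dates are hypothetical.

```python
from datetime import date, timedelta

def split_on_gaps(timestamps, step):
    """Split sorted timestamps into segments of consecutive, equally spaced points."""
    segments = [[timestamps[0]]]
    for prev, curr in zip(timestamps, timestamps[1:]):
        if curr - prev != step:
            segments.append([])  # gap found: start a new segment
        segments[-1].append(curr)
    return segments

# Hypothetical daily data with observations missing from 6 to 9 January.
days = [date(2023, 1, d) for d in (1, 2, 3, 4, 5, 10, 11, 12)]

segments = split_on_gaps(days, step=timedelta(days=1))
print([len(s) for s in segments])  # [5, 3]
```

Each segment is then equally spaced on its own and can be analyzed separately.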

In the second step, we remove the seasonal and trend components (the non-stationary part) from the time series and keep the noise (the stationary part). Then we check whether the filtered part, i.e., the noise, is actually stationary. The components we set aside (trend and seasonality) are the residue of the time series. We use them after building the model to reconstruct the actual data.
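This step can be sketched for the additive case as follows. Everything here is an assumption made for illustration: the simulated quarterly `series`, the straight-line trend fitted by least squares, and the seasonal estimate taken as the average per position in the cycle. Real data may call for a different trend or seasonal estimate.

```python
import random

def fit_line(values):
    """Least-squares line through (0, y0), (1, y1), ...; returns (slope, intercept)."""
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return slope, y_mean - slope * t_mean

# Hypothetical quarterly data: linear trend + repeating pattern + small noise.
rng = random.Random(7)
period = 4
pattern = [5.0, -3.0, -4.0, 2.0]
series = [50 + 2 * t + pattern[t % period] + rng.gauss(0, 0.3) for t in range(24)]

# 1. Estimate and remove the trend.
slope, intercept = fit_line(series)
trend = [intercept + slope * t for t in range(len(series))]
detrended = [y - tr for y, tr in zip(series, trend)]

# 2. Estimate the seasonal component as the average per position in the cycle.
profile = [sum(detrended[p::period]) / len(detrended[p::period]) for p in range(period)]
seasonal = [profile[t % period] for t in range(len(series))]

# 3. What is left is the noise, the part we go on to model.
noise = [d - s for d, s in zip(detrended, seasonal)]

# The components set aside can be added back to reconstruct the data.
rebuilt = [tr + s + e for tr, s, e in zip(trend, seasonal, noise)]
print(max(abs(a - b) for a, b in zip(series, rebuilt)))
```

The printed difference is zero up to floating-point rounding, which shows that trend, seasonality, and noise together reproduce the original series.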

In the next step, we model the stationary time series using algorithms such as ARMA, ARIMA, or exponential smoothing. Using the model, we forecast the demand or future value.
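As a taste of what such a model looks like, here is a minimal hand-written sketch of simple exponential smoothing, the most basic member of the exponential smoothing family. The function name, the `demand` values, and the choice of `alpha` are assumptions made for illustration; ARMA and ARIMA are not shown.

```python
def simple_exp_smoothing(values, alpha):
    """One-step-ahead forecast: each new observation updates a running level."""
    level = values[0]
    for y in values[1:]:
        # alpha close to 1 trusts recent data; alpha close to 0 trusts the history.
        level = alpha * y + (1 - alpha) * level
    return level

# Hypothetical demand figures.
demand = [0, 4, 8]

print(simple_exp_smoothing(demand, alpha=0.5))  # 5.0
print(simple_exp_smoothing(demand, alpha=1.0))  # 8.0, the last observation
```

With `alpha=0.5` the level moves from 0 to 2 to 5, so the forecast lags behind the latest value; with `alpha=1.0` the forecast is simply the last observation.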

Then we combine the forecast with the residue (trend and seasonality). We find the residual series by subtracting the forecasted values from the actual values, and then check whether the residual series is pure noise.

Let’s not make this long. I am stopping here and will post Part II in a few days. Part II will cover various algorithms used in time series analysis. We’ll also see the different challenges that come up while modeling a series.
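The residual check can be sketched like this. The `forecast` and `actual` values are simulated, and looking only at the mean and the lag-1 autocorrelation is a simplification assumed for this example. Pure noise should have both close to zero.

```python
import random

def lag1_autocorrelation(values):
    """Sample autocorrelation between each value and the one before it."""
    n = len(values)
    m = sum(values) / n
    num = sum((values[t] - m) * (values[t - 1] - m) for t in range(1, n))
    den = sum((v - m) ** 2 for v in values)
    return num / den

# Hypothetical case: the forecast captures the signal, so only noise is left over.
rng = random.Random(3)
forecast = [20 + 0.1 * t for t in range(200)]
actual = [f + rng.gauss(0, 1) for f in forecast]

# Residual = actual value minus forecasted value.
residuals = [a - f for a, f in zip(actual, forecast)]
residual_mean = sum(residuals) / len(residuals)
residual_r1 = lag1_autocorrelation(residuals)

print(round(residual_mean, 3), round(residual_r1, 3))
```

If either number is far from zero, the residuals still contain structure that the model has missed.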